By Dr. Kaśka Porayska-Pomsta

Associate Professor of Adaptive Technologies for Learning and an RCUK Academic Fellow at the UCL Knowledge Lab

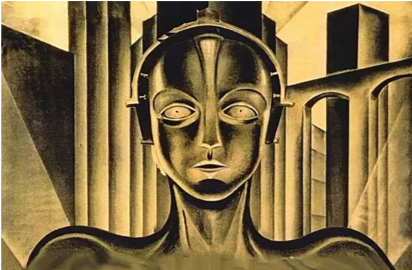

Artificial Intelligence (AI) gets quite a lot of bad press. It is understood by few and feared by many. It is overhyped by the media, sci-fi movies and eye-watering financial investments by the tech giants and, increasingly, governments. As such, AI does not yet sit comfortably with many who care about human development and wellbeing, or social justice and prosperity. This feeling of discomfort is fueled by increasing reports that, so far, AI-enhanced human decision-making can amplify social inequalities and injustice, rather than address them. This is because AI models are based on data which harbour our (human) biases and prejudices (e.g. racial discrimination in policing). The bad press is not helped by the growing perception that AI is a somewhat mysterious area of knowledge and skill: a tool mastered by a few initiated technological ‘high priests’ (mainly white male engineers) to spy on us, to take our jobs from us, and to control us.

In this context, it is not surprising that many non-specialists in AI, whose practices (and jobs) may be affected by its use, have at the very best a lukewarm, skeptical attitude towards AI’s potential to provide a mechanism for positive social change, and at the very worst vehemently oppose it. In short, for many people, the jury is still out on the extent and the exact nature of AI’s ability to work for the social benefit of all.

AI is not the same as HI

Given the emergent evidence of AI’s present potential to reinforce rather than narrow the existing disparities in our societies, does it make any sense to even consider AI as a potential tool for enhancing social benefit?

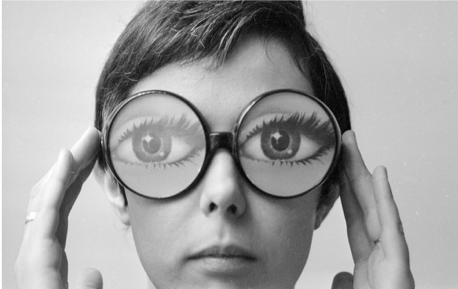

For many of us who research at the intersection of AI and the Social Sciences (http://iaied.org/about/; UCL KL), the answer is a resounding ‘yes’. The sheer revelation that comes with AI having already exposed the shockingly biased basis of our own decisions in many high-stakes contexts, such as law enforcement, is a prime example of how powerful a mirror onto ourselves this technology can be. As a consequence of this exposure, the AI and ethics movement is already so strong around the world that governments as well as the technological giants are unable to ignore it. Some AI-for-social-good activists raise pertinent questions about what steps we can take as a people towards creating a fairer and more inclusive world for ourselves, and what exactly we can and should demand from AI and its creators. Diversity in the data used as the basis for AI models, diversification of the AI engineering workforce (e.g. to infuse AI solutions with the perspectives of women, ethnic minorities, or the so-called neuro-atypical groups), as well as active participation of the wider public, social scientists and public practitioners, e.g. teachers, in the design, implementation and interrogation of AI technologies, have so far been identified as key to addressing the present concerns.

Clearly, what we are seeing is that like other technologies before it, AI is not a magic bullet to cure our ills. Rather it is an instrument which may or may not serve our interests, but for whose exact design and use we are all responsible.

The first step to widening public and inter-sector participation in developing and using AI for social benefit is to de-sensationalise it. In particular, it is critical that AI ceases to be presented and perceived as an exact copy of ourselves. It is not and will never be so! This is a good thing. In fact, in the context of social inclusion and education, this difference between Artificial Intelligence and Human Intelligence (HI) can, for example, provide a useful means for easing social anxiety and improving self-efficacy for those who may normally find human contexts overpowering and confusing, e.g. in the context of autism.

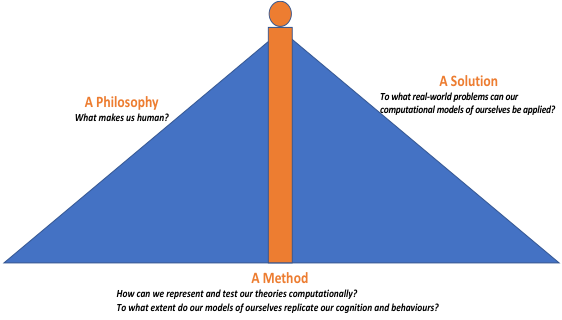

From its early days, and by necessity owing to the need for computational efficiency (Russell and Norvig, 2003), AI was not expected to be an exact replica of HI, even if the aim has always been to create AI systems that act like us insofar as they emulate autonomous decision-making, given some well-defined goals, pre-defined measures of success, and a set of constraints that characterise the environment in which those goals must be achieved. Thus, the original conception of AI was, and for many of us still remains:

First and foremost, as applied philosophy which helps us formulate and unpack key questions about different aspects of (human) intelligence;

Second, as a method for addressing those questions through practicing and testing the different theories by operationalising them through computer systems that produce observable and measurable behaviors;

Third, as a solution which may be potentially game changing for us (https://deepmind.com/applied/deepmind-health/), but which nevertheless is an artefact of our questioning and experimentation based on the current (that is, incomplete) state of our knowledge and understanding.

With AI having now crossed over from a purely scientific domain to practical mainstream applications, AI as a solution has, arguably, become the most visible in the wider public domain. While fueling plenty of interest and investment, this limited view of AI, coupled with the confusion about its seeming proximity to our own cognition and behaviors, obscures a more measured and productive view on the full potential of AI in helping us become more tolerant, inclusive, and empowering of each other.

Through the looking glass

Thus, to appreciate the role that AI can play in supporting inclusion, it is important to see it in terms of the interplay between it as a domain of and a tool for enquiry into what makes us human (AI as a philosophy and a method of enquiry) and the way we, as a society, may want to design and deploy it for human benefit, including for helping us become more inclusive (AI as a solution).

This helps us acknowledge that, as in any other scientific domain, both the questions and the answers that we are able to formulate with the help of AI are relative to the current state of our knowledge and social aspirations. This is of particular relevance to inclusion, because it helps us approach inclusion and education not as some fixed state for which there is a set of equally fixed solutions that can be administered to us like medicine, but rather as a social process and a state of mind, which crucially requires our own investment, enquiry and willingness to change. In this case, and unlike other scientific domains, AI can uniquely provide intellectual and physical means, which are also concrete and scalable, for us to explore and speculate (e.g. through simulated scenarios) about what it means to be inclusive, along with the mechanisms that may be conducive and effective for fostering inclusion.

AI in service of inclusion

Inclusion, as one aspect of social welfare, fundamentally rests first on people’s ability to understand, regulate and accept themselves, and second, on their willingness to understand, and on their ability to access other people’s experiences and points of view.

Research tells us that the ability to question and to know oneself (also sometimes referred to as metacognitive competencies) is instrumental to the formation of self-identity, critical thinking, moral values and ultimately, to self-efficacy and self-determination, thus, broadly speaking, to our individual empowerment. Such competencies are at the core of our executive functions, which are responsible for our ability to plan, predict, strategise, and learn. They are fundamental to our individual and social functioning, and wellbeing. On the other hand, the ability to entertain other people’s perspectives is critical to developing a sense of justice and social responsibility that provides the foundations for any democratic, tolerant and inclusive society, and in the case of educational practitioners – it provides the basis for tailored and informed interventions that cater equally well for both typical and so-called atypical learners.

However, understanding oneself, let alone another person, is very hard to achieve, because:

- What we are trying to understand lies in the sphere of subjective and fleeting experiences that further reside in our respective subjective realities, which are by definition hard to capture, revisit and evaluate against those of other people;

- In order to fully understand one’s experiences and judgements, they need to be articulated and exercised through verbalisation, and this can be very hard to do, both because of the first point above and because, often, we do not actually fully know what our experiences and judgements are, especially if they are emotionally loaded;

- Willingness to communicate our experiences and judgements, even privately to ourselves, let alone to others, requires the right kind of nurturing environment in which people will feel safe and motivated to engage in such self- and mutual examination and expression. Human environments are notoriously inconsistent, often judgemental and ridden with complex, power-inflected social relations, which often negate the sense of safety and equality, especially for those who are defined or who define themselves by their relative difference to the so-called ‘norm’;

- Even if all things are equal and experiences and judgements can be articulated, their interpretation by others, even those with the best intentions, is often hindered by the interpreters’ own experiences, judgements and agendas (that is, biases).

AI beyond the bias

This is where AI can actually help. Here is how:

AI as a stepping stone. AI-driven environments are very good at providing situated, repeatable experiences to their users, offering an element of predictability and a sense of safety, while creating an impression of credible social interactions, for example through adapting their feedback and reactions to the actions of the users. This is important in contexts where the users may experience social anxiety, e.g. in autism, or may lack self-efficacy and self-confidence, as many learners do at some point in their studies. Approaches such as that adopted in the ECHOES project, where young children with autism interact in an exploratory way with an AI agent as a social partner, provide an example of how AI can be used to create an adaptive environment in which the child is in charge of the interaction and where both their difference from the ‘norm’ and the anxiety of failure are removed. Here, the fact that AI is not the same as a human is key, because it allows the children to get used to the different social scenarios, with the agent providing a consistent, but not fully predictable, interaction partnership, and with the level of support given by the AI agent moderated dynamically, moment by moment, based on individual children’s needs.

AI as a mirror. AI operates on precise data and this means that it is also able to offer us a precision of judgement and recall of events that is virtually impossible to achieve in human contexts. With respect to inclusion, provided that there is a possibility of come-back from the human, this can be very valuable, even if, in all its precision, AI does not necessarily offer us the truth. Educational AI-based systems which employ the so-called open learner models (OLMs) show how users’ self-awareness, self-regulation and ultimately self-efficacy can be supported by allowing them to access, interrogate, and even change (through negotiation with the AI system) the data generated about them during their learning with the system. For example, the TARDIS project successfully used the OLM approach to provide young people at risk of exclusion from education, employment or training with insight into their social interaction skills in job interview settings and with strategies for improving those skills. Here, data about the young people’s observable behaviors is first gathered and interpreted during interactions with AI agents acting as job recruiters. This data, which relates to the quality of users’ specific verbal behaviors (e.g. length of answer to an interview question) and non-verbal behaviors (e.g. facial expressions), is then used to provide the basis for detailed inspection, aided by a human coach, of the users’ strengths and weaknesses in their job interview performance, and for developing a set of strategies for self-monitoring and self-regulation during further interviews.
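For readers who find it easier to think in code, the idea of an open learner model can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the class and method names (`OpenLearnerModel`, `observe`, `inspect`, `negotiate`), the running-mean update and the "meet halfway" negotiation rule are all hypothetical, and none of it is taken from TARDIS or any other real system. What it tries to show is the essential property described above: the system's beliefs about the learner are visible to the learner, come with their evidence, and can be contested.

```python
from dataclasses import dataclass, field


@dataclass
class Belief:
    """One belief the system holds about a learner skill (hypothetical)."""
    skill: str
    estimate: float = 0.0          # system's current estimate, in [0, 1]
    observations: int = 0          # how many scored observations back it
    evidence: list[str] = field(default_factory=list)


class OpenLearnerModel:
    """Illustrative open learner model: inspectable and contestable."""

    def __init__(self) -> None:
        self._beliefs: dict[str, Belief] = {}

    def observe(self, skill: str, score: float, note: str) -> None:
        """Fold a new observed score into a running mean (assumed rule)."""
        belief = self._beliefs.setdefault(skill, Belief(skill))
        total = belief.estimate * belief.observations + score
        belief.observations += 1
        belief.estimate = total / belief.observations
        belief.evidence.append(note)

    def inspect(self, skill: str) -> Belief:
        """Let the learner see the estimate and the evidence behind it."""
        return self._beliefs[skill]

    def negotiate(self, skill: str, learner_estimate: float, reason: str) -> float:
        """Learner challenges the estimate; assumed rule: meet halfway."""
        belief = self._beliefs[skill]
        belief.estimate = (belief.estimate + learner_estimate) / 2
        belief.evidence.append(f"learner challenge: {reason}")
        return belief.estimate


olm = OpenLearnerModel()
olm.observe("eye_contact", 0.4, "mock interview 1")
olm.observe("eye_contact", 0.6, "mock interview 2")
agreed = olm.negotiate("eye_contact", 0.9, "I was nervous the first time")
```

In a real system the negotiation step would be far richer, for example a dialogue supported by a human coach, but even this toy version records the learner's challenge alongside the system's own evidence rather than silently overwriting either side.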

AI as a medium. Just as AI systems, such as those based on OLMs, can support the development of self-understanding and self-regulation, they can also provide unique and unprecedented insight for others (teachers, parents, peers). Gaining such a perspective can be game changing for the inclusion practices and individual support interventions employed, because it can reveal learners’ behaviors and abilities that might be hard to observe or foster in traditional environments. In ECHOES, for example, some children who were thought to be uncommunicative became motivated to communicate within the AI environment, giving the teachers an idea of their real potential and changing the way in which they were supported in other contexts. Thus, the potential of AI as a medium is not merely in the data and its classification that it is able to produce, but rather in the way that it provokes human reflection, interaction and adaptation of the existing points of view and practices.

AI as a time machine. AI environments and methods offer an opportunity to experiment with different scenarios and with our knowledge in ways that allow us to experience those scenarios and observe the consequences of our explorations in the physical world, rather than theoretically. In turn, this provides us all with an unprecedented opportunity for using AI not only as a way to revisit past scenarios, but also to play out and explore possible future outcomes. While this is already possible to some extent, for example in systems which allow students to learn by teaching the AI (e.g. Betty’s Brain), using AI for exploring future scenarios brings a new dimension, not only to helping learners develop key executive functions such as planning and strategising, but also to developing immersive, highly personalized and personal environments with potential for mental health interventions as well as preventative practices for promoting well-being from an early age.

References

Russell, S. J., & Norvig, P. (2003). Artificial intelligence: A modern approach (2nd ed.). Upper Saddle River, N.J. ; New Delhi: Prentice Hall/Pearson Education.